Kubernetes in Europe: Can European Cloud Providers Compete with the American Giants?

When I first started thinking about this blog, one big question kept coming up: Why? Not “why bother,” but why Europe, why Kubernetes, and most importantly, why managed Kubernetes in Europe?

Why Europe

There’s been a growing interest in moving data and compute back to European clouds. If you’re curious about why this shift is happening, check out this post for more on the industry’s wake-up call. Over the last couple of years, though, we’ve all gotten used to the convenience, reliability, and scale of the big American cloud providers like AWS, Azure, and GCP. The idea of going back to managing individual servers feels like a step backward for most DevOps teams. With a new wave of European cloud providers focused on sovereignty and subject to European law, maybe we can finally have our data close to home—without giving up the cloud benefits we’ve come to expect.

Why Managed Kubernetes

Kubernetes has become the universal language of cloud infrastructure. Its open ecosystem and wide adoption mean teams can focus on building, without worrying much about the underlying servers. Managed Kubernetes, in particular, strikes a great balance: less operational overhead, but still all the flexibility and familiar tooling like Terraform, kubectl, and Helm. And with open standards, there’s less risk of getting locked in.

Why Managed Kubernetes in Europe

That brings us to the heart of the matter: can European providers deliver a managed Kubernetes experience that’s not just technically sound, but actually ready for the realities of enterprise IT? It’s not enough to spin up clusters on demand. What matters is whether you can plug into your SSO, connect to managed databases, set up monitoring that works with your stack, and get support when things break. Are European platforms still just playing catch-up, or are they starting to carve out their own strengths, shaped by local laws and the real-world needs of teams in Europe?

To get a real answer, I decided to go beyond the documentation and put these platforms to the test. I built a fully automated benchmarking pipeline to see not just whether they work, but how well they work, and where the differences really show up in practice.

Benchmarking the cloud providers

We are comparing four cloud providers in this study: Azure, OVHcloud, StackIt, and Hetzner. They fall into three broad categories: the American hyperscaler (Azure), the European managed cloud service providers, or CSPs (OVHcloud and StackIt), and the IaaS provider (Hetzner).

I built a GitHub Actions pipeline that reuses the same steps for every provider, except for the infrastructure and configuration part. Each cloud has its own Terraform provider, so that job is different for each one. Azure and StackIt were the easiest: after running Terraform, all data was accessible and the clusters were ready to go. OVHcloud needed a quick script to fetch the pgAdmin credentials, since I couldn’t provide my own. Hetzner, being pure IaaS, required all the configuration after deployment.
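To make that split concrete, here is a minimal Python sketch of the structure: one provider-specific provisioning function, an optional post-configuration hook for pure IaaS like Hetzner, and a list of shared steps that every provider runs unchanged. This is an illustration under assumptions, not the actual workflow (which is GitHub Actions plus Terraform); `run_benchmark`, `Context`, and the step functions are hypothetical names.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical context passed between steps, e.g. kubeconfig path and database host.
Context = Dict[str, str]
Step = Callable[[Context], None]


def run_benchmark(
    provision: Callable[[], Context],
    shared_steps: List[Step],
    post_configure: Optional[Step] = None,
) -> Context:
    """Run one benchmark: provider-specific setup first, then the shared steps in order."""
    # Provider-specific: e.g. `terraform apply` and reading its outputs.
    context = provision()
    # Only needed where the provider leaves configuration to you (IaaS).
    if post_configure is not None:
        post_configure(context)
    # Identical for every provider: deploy, scale, measure, clean up.
    for step in shared_steps:
        step(context)
    return context
```

For a managed provider, `post_configure` simply stays `None`; in the Hetzner case it would be where k3s, the ingress controller, and the autoscaler get installed.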

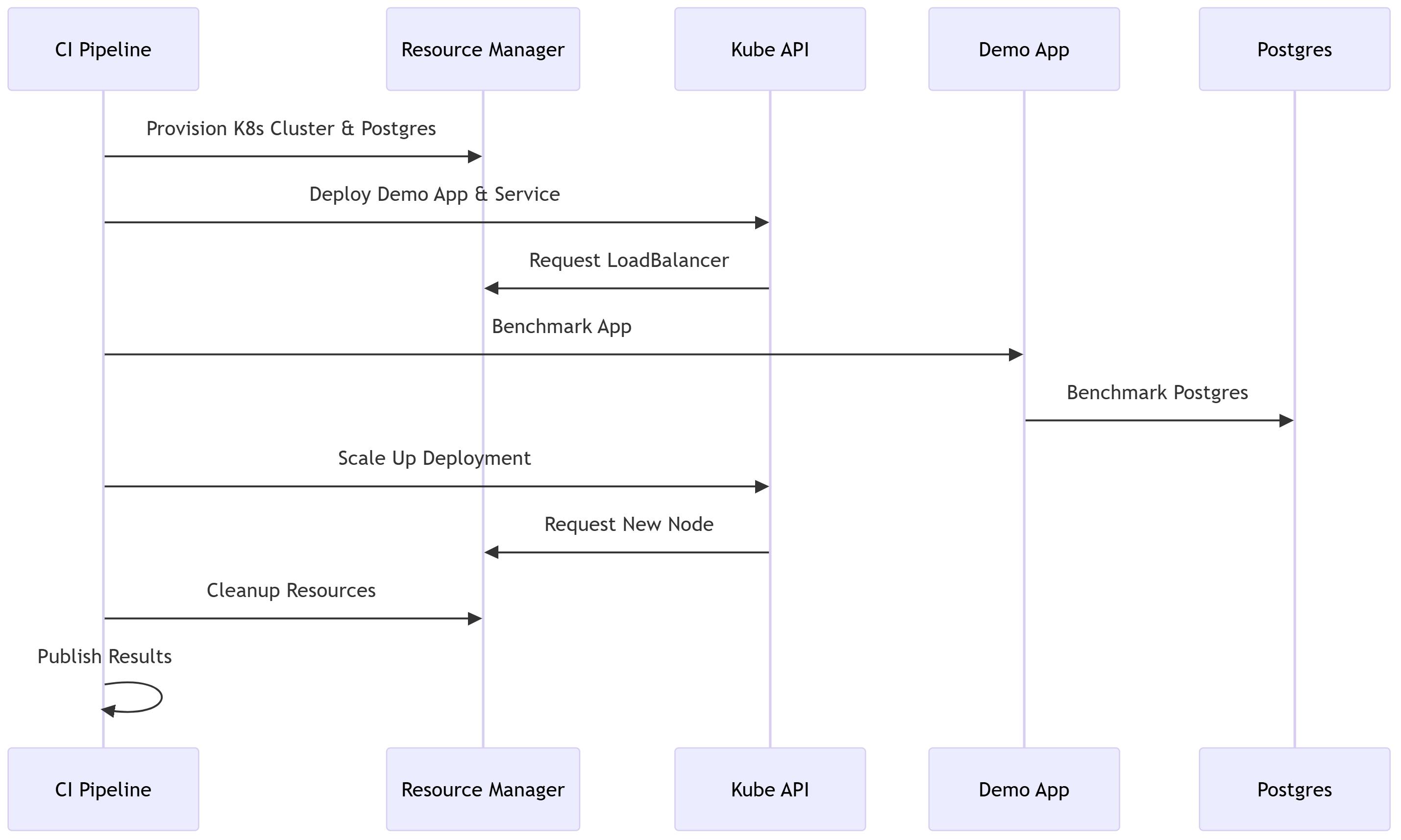

Once the cluster is ready, the pipeline continues identically for all providers, a testament to the vendor-agnostic nature of the Kubernetes platform layer. It deploys a demo application, then scales the deployment up until the node autoscaler is forced to add capacity. Throughout the process, the pipeline measures key metrics such as provisioning time, scaling speed, and external IP availability. When the test is done, everything is cleaned up automatically so the next run can start from scratch.
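Most of these measurements boil down to "how long until condition X holds". A minimal sketch of such a timer, assuming a polling approach: `time_until` repeatedly calls a probe until it returns a value, and `external_ip_probe` asks `kubectl` for the first LoadBalancer ingress IP of a Service. Both function names are hypothetical, and the probe assumes the provider reports an IP rather than a hostname.

```python
import subprocess
import time
from typing import Callable, Optional, Tuple


def time_until(
    probe: Callable[[], Optional[str]],
    timeout_s: float = 900.0,
    interval_s: float = 5.0,
) -> Tuple[float, str]:
    """Poll `probe` until it returns a non-empty string; return (elapsed seconds, value)."""
    start = time.monotonic()
    while True:
        value = probe()
        if value:
            return time.monotonic() - start, value
        if time.monotonic() - start >= timeout_s:
            raise TimeoutError(f"condition not met within {timeout_s} s")
        time.sleep(interval_s)


def external_ip_probe(service: str, namespace: str = "default") -> Optional[str]:
    """Return the LoadBalancer ingress IP of a Service, or None while it is still pending."""
    result = subprocess.run(
        [
            "kubectl", "get", "service", service, "-n", namespace,
            "-o", "jsonpath={.status.loadBalancer.ingress[0].ip}",
        ],
        capture_output=True,
        text=True,
        check=False,  # a not-yet-assigned IP is expected, so don't raise on errors
    )
    return result.stdout.strip() or None
```

Usage would look like `elapsed, ip = time_until(lambda: external_ip_probe("demo"))`, where `demo` is a hypothetical Service name. Using `time.monotonic()` keeps the measurement immune to wall-clock adjustments during a run.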

The main architecture for each test consists of a Kubernetes cluster and a separate managed PostgreSQL database. All connectivity outside the cluster goes over the public internet, although every provider also supports private networking. For this benchmark, the focus is on compute and memory: by deploying a batch of pods at once, we quickly exhaust the resources on the node, forcing the system to provision another. Networking is left to the provider as much as possible: I simply provision a LoadBalancer service as part of the deployment and let the cloud provider figure out which components are needed behind the scenes.
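Why a batch of pods forces a new node: the scheduler places pods according to their resource requests, and a pod that fits on no existing node stays Pending, which is what the cluster autoscaler reacts to. The back-of-the-envelope sketch below assumes identical nodes, treats the "free" figures as allocatable capacity already net of system and DaemonSet pods, and ignores per-node pod limits and other scheduling constraints. The function names and numbers are illustrative, not the benchmark's actual node sizes.

```python
def pods_that_fit(free_cpu_m: int, free_mem_mib: int, req_cpu_m: int, req_mem_mib: int) -> int:
    """Pods of one size that fit on a node, given free CPU (millicores) and memory (MiB)."""
    by_cpu = free_cpu_m // req_cpu_m
    by_mem = free_mem_mib // req_mem_mib
    # A pod needs both resources, so the scarcer one sets the limit.
    return min(by_cpu, by_mem)


def replicas_to_force_scale_up(
    nodes: int, free_cpu_m: int, free_mem_mib: int, req_cpu_m: int, req_mem_mib: int
) -> int:
    """Smallest replica count that leaves at least one pod Pending on identical nodes."""
    return nodes * pods_that_fit(free_cpu_m, free_mem_mib, req_cpu_m, req_mem_mib) + 1
```

For example, with 1900m CPU and 5000 MiB free per node and pods requesting 250m and 256 MiB, CPU is the bottleneck (1900 // 250 = 7 pods per node), so a two-node cluster needs 2 × 7 + 1 = 15 replicas before the autoscaler has a reason to act.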

Pipeline Process (Swimlane)

Note: For brevity, callbacks, metrics collection, and measurement steps are excluded from the swimlane diagram.

Hetzner is a bit of an outlier here. At the time of writing, there’s no such thing as a fully managed Kubernetes solution on Hetzner Cloud. Instead, I aimed to create an architecture in the same spirit, but sacrificed quite a bit in terms of manageability and stability. Unlike the other CSPs, on Hetzner I deployed two servers: one for the master node of the tiny k3s cluster, and one for the PostgreSQL server, using a collection of scripts and templates to configure the services. It’s definitely not as smooth as using a managed Kubernetes service. I had to install nginx, set up cluster autoscalers, link worker nodes to the cluster, and connect the cluster to Hetzner. These are steps that are completely superfluous in a managed environment.
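The post doesn't show how the worker nodes were linked to the k3s cluster. One common approach, documented by k3s, is to run its install script on each new server with `K3S_URL` pointing at the server node and `K3S_TOKEN` set to the node token, which the server stores at `/var/lib/rancher/k3s/server/node-token`. The sketch below renders that as cloud-init user data for a freshly created server. The function name is hypothetical, and it assumes the worker can reach the server's API on port 6443.

```python
import json
import shlex


def k3s_agent_user_data(server_ip: str, token: str, port: int = 6443) -> str:
    """Render cloud-init user data that joins a new server to a k3s cluster as an agent."""
    server_url = f"https://{server_ip}:{port}"
    # Setting K3S_URL makes the install script set up an agent (worker) instead of a server.
    join_cmd = (
        "curl -sfL https://get.k3s.io | "
        f"K3S_URL={shlex.quote(server_url)} K3S_TOKEN={shlex.quote(token)} sh -"
    )
    # json.dumps yields a double-quoted string, which is also a valid YAML scalar.
    return "#cloud-config\nruncmd:\n  - " + json.dumps(join_cmd) + "\n"
```

Each of these steps (fetching the token, templating user data, wiring up the autoscaler) is exactly the kind of work a managed service does for you.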

Still, Hetzner is a popular choice for cost-conscious teams, so it deserved a spot in the lineup.

Metrics Collected

- Infrastructure deployment time: How quickly you can go from zero to a running cluster. Indicates how fast infrastructure changes can be made.

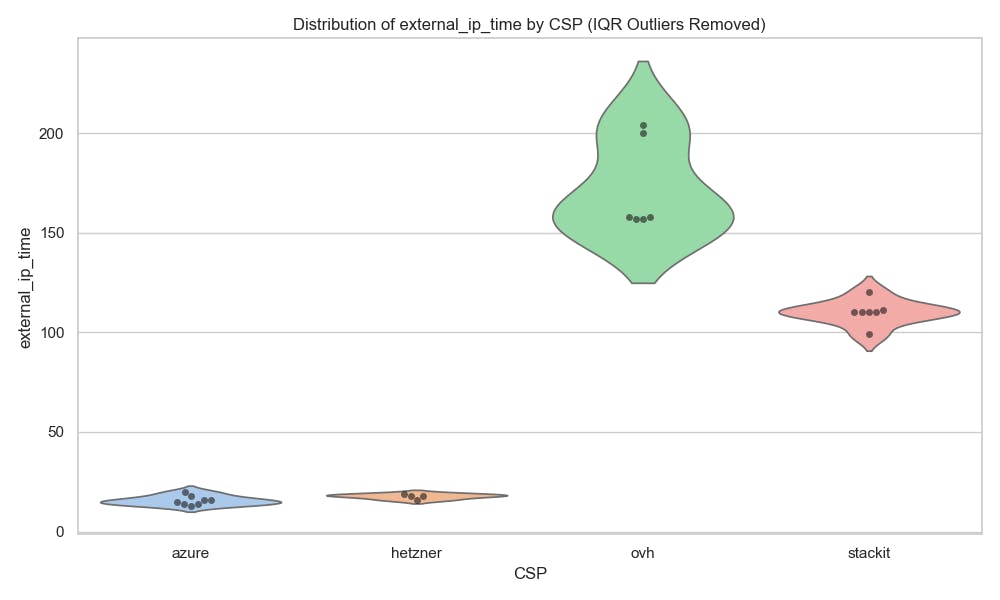

- External IP availability: How fast your services become accessible from the outside world. Useful for understanding exposure delays.

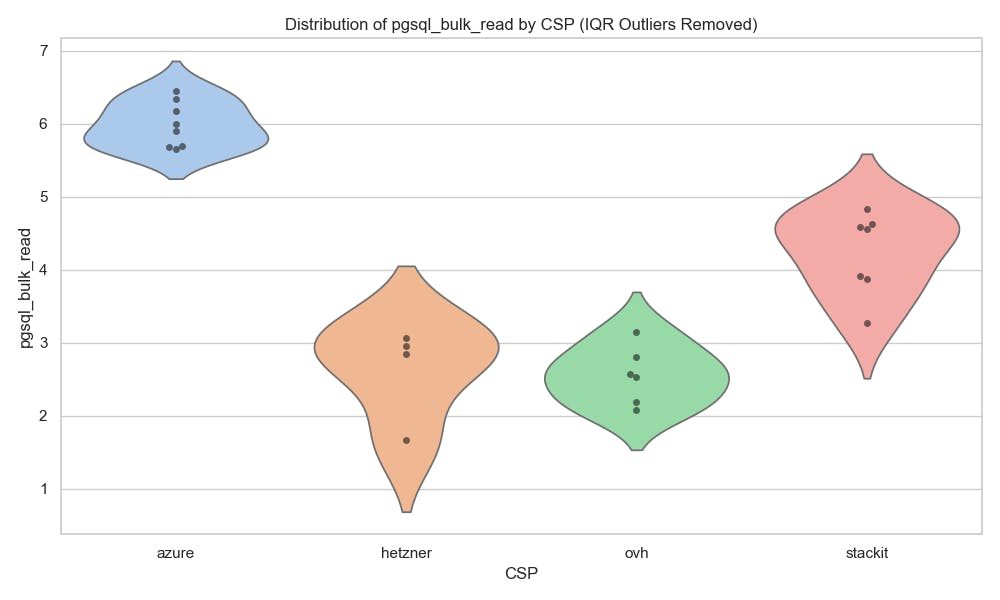

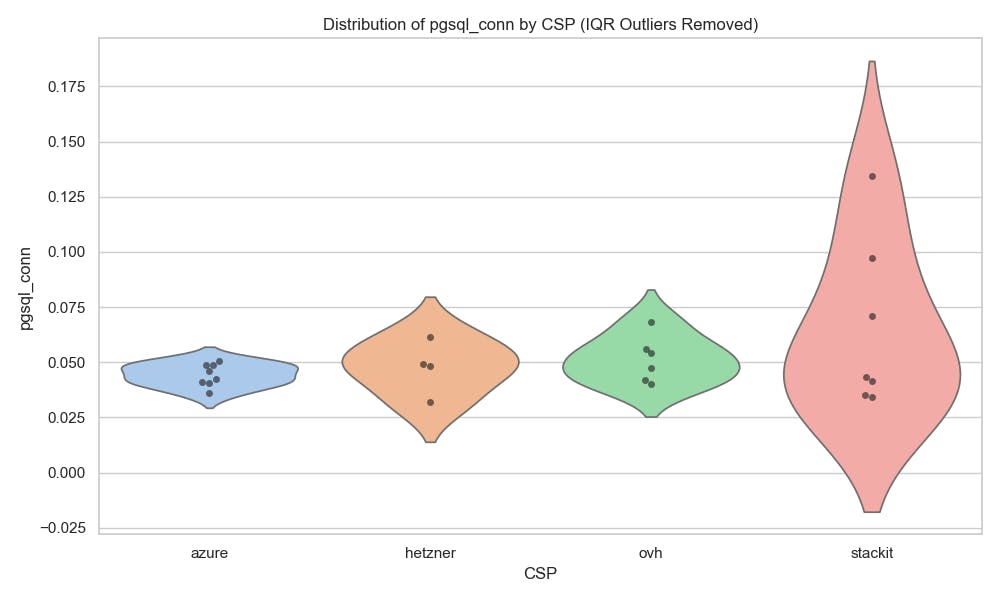

- PostgreSQL database performance: Measures the speed and consistency of managed database operations across providers.

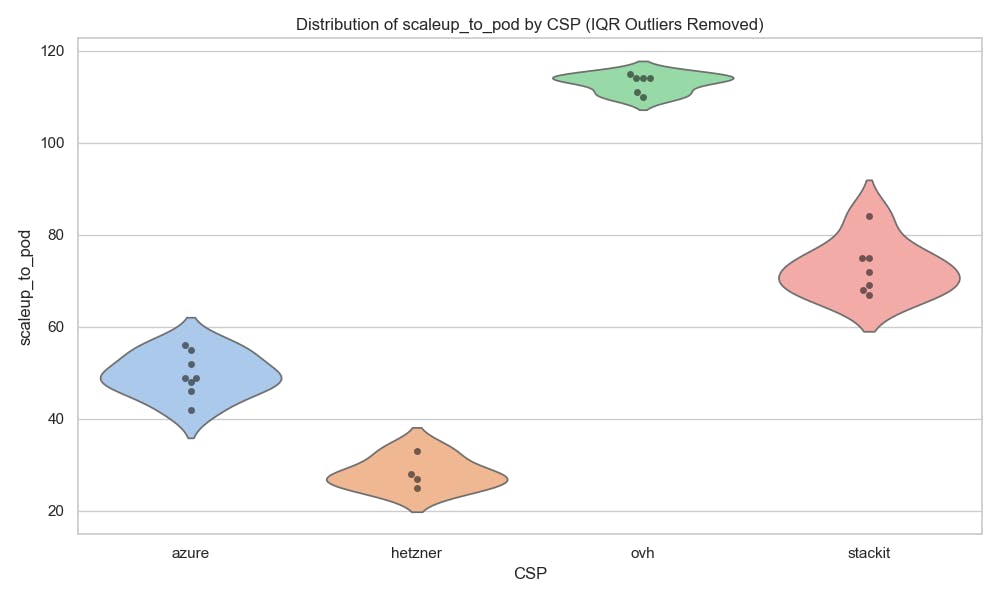

- Scaling efficiency: How well the platform handles scaling up workloads and adding capacity under load.

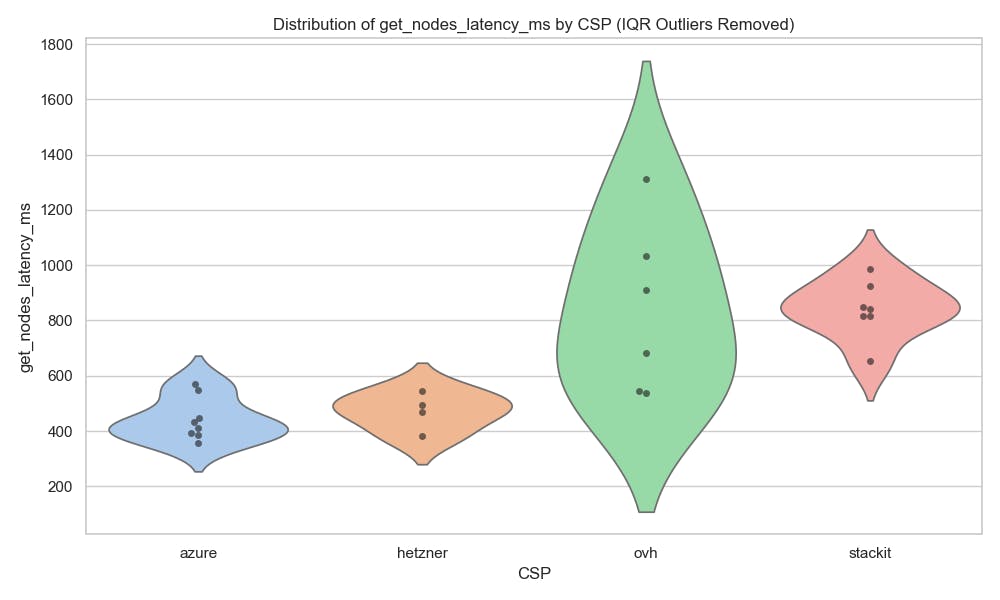

- Kubernetes API performance: Reflects the responsiveness of the Kubernetes control plane, impacting automation and daily operations.
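The post doesn't detail how API performance was measured. One straightforward approach is to time a batch of identical API requests and report median and tail latency, since a slow tail is what makes automation feel sluggish. In this hypothetical helper, `call` could wrap a client-library request or a `kubectl` invocation; the latter also times process start-up, so compare like with like.

```python
import statistics
import time
from typing import Callable, Dict


def measure_latency(call: Callable[[], object], samples: int = 20) -> Dict[str, float]:
    """Time `samples` invocations of `call` and summarize latency in milliseconds."""
    if samples < 2:
        raise ValueError("need at least two samples")
    durations_ms = []
    for _ in range(samples):
        start = time.perf_counter()
        call()
        durations_ms.append((time.perf_counter() - start) * 1000.0)
    # 19 cut points at 5% steps; index 18 is the 95th percentile.
    # "inclusive" keeps the estimate within the observed range for small samples.
    cuts = statistics.quantiles(durations_ms, n=20, method="inclusive")
    return {
        "median_ms": statistics.median(durations_ms),
        "p95_ms": cuts[18],
        "max_ms": max(durations_ms),
    }
```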

Results

Before diving into the performance metrics, I want to briefly look at the hosting costs of the different providers.

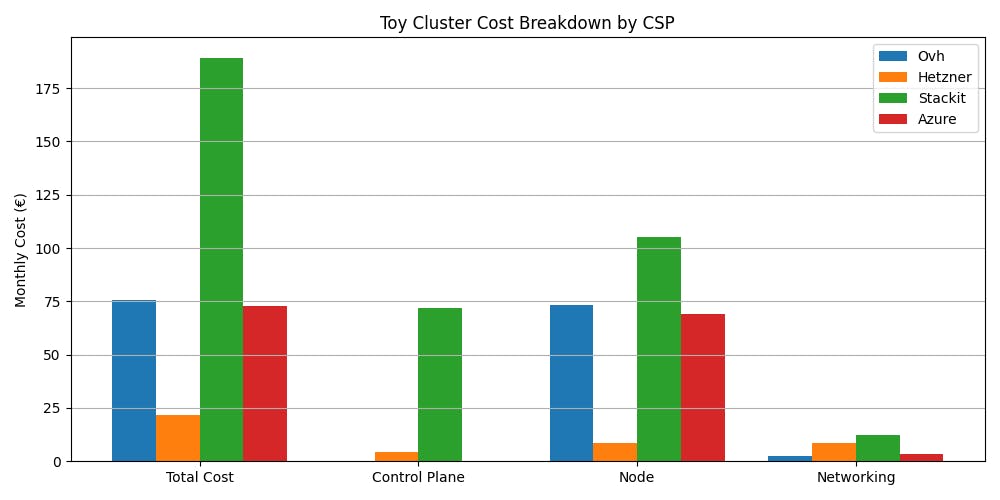

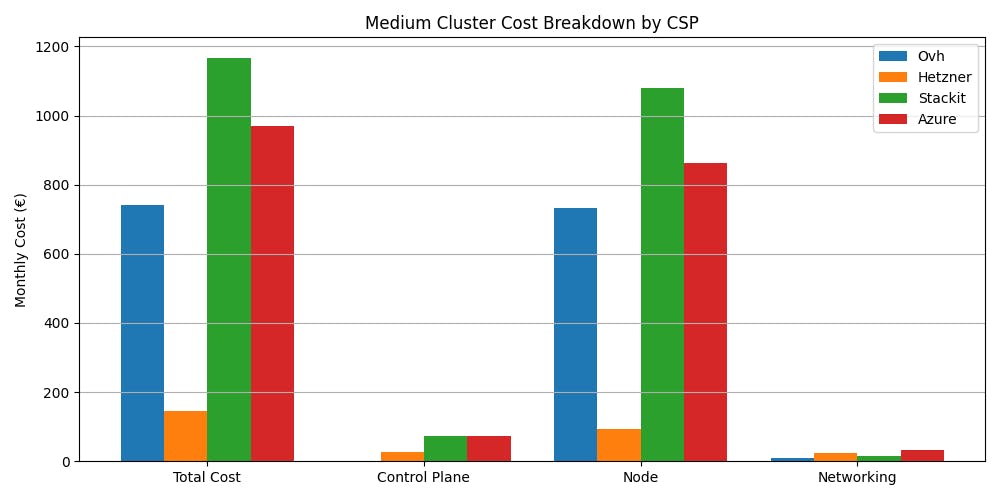

Cost comparison

Pricing models for cloud resources vary widely, and the differences matter as much as the performance results. Here, I compare two typical scenarios: a small cluster with two worker nodes using the cheapest available components, and a medium cluster with 10 more powerful nodes and upgraded networking. Larger clusters generally follow the same cost structure. Keep in mind, though, that the advertised rates may no longer apply to very large or enterprise-scale clusters: cloud providers often negotiate custom pricing or discounts at scale, so your actual costs could differ significantly from the public numbers.
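The interplay between a flat control-plane fee and per-node prices can be captured in a tiny cost model. All prices used with it below are placeholders, not any provider's actual rates, and `ClusterPricing` is a hypothetical structure that ignores discounts, traffic, and storage. What it illustrates is that a fixed control-plane fee weighs heavily on a two-node cluster but shrinks as a share of the bill as nodes are added.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterPricing:
    """Hypothetical monthly prices for one provider, all in the same currency."""
    control_plane: float  # flat fee per cluster; 0.0 where the control plane is free
    per_node: float       # price of one worker node of the chosen size
    extras: float = 0.0   # e.g. load balancer, public IPs, managed database


def monthly_cost(pricing: ClusterPricing, nodes: int) -> float:
    """Total monthly cost: control plane plus nodes plus fixed extras."""
    return pricing.control_plane + nodes * pricing.per_node + pricing.extras


def control_plane_share(pricing: ClusterPricing, nodes: int) -> float:
    """Fraction of the monthly bill spent on the control plane."""
    total = monthly_cost(pricing, nodes)
    return pricing.control_plane / total if total else 0.0
```

With placeholder prices of 100 for the control plane, 50 per node, and 20 in extras, the control plane is about 45% of the bill at two nodes (100 / 220) but only about 16% at ten nodes (100 / 620).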

What stands out?

- Hetzner is by far the cheapest, but you pay with your time and expertise—there’s no managed experience.

- OVHcloud is aggressively priced, especially for larger nodes, and the control plane is always free.

- Azure and StackIt are more expensive, but both offer a managed experience with additional features and support.

- StackIt’s control plane is the priciest, but this aligns with their business focus: as the German cloud for business, StackIt is designed for enterprise customers, not for hobbyists spinning up clusters for fun. For larger clusters, the control plane cost becomes less significant.

Ultimately, the best value depends on your needs: Hetzner is unbeatable for raw price, OVHcloud is a strong value for managed K8s, and Azure and StackIt offer more features and support at a premium.

Benchmark results

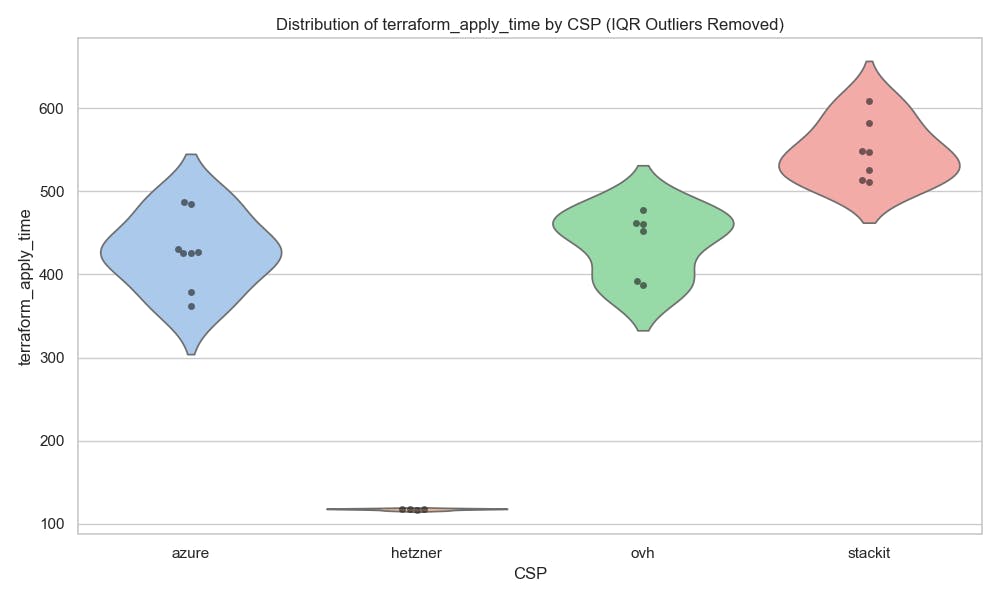

After running the automated benchmarks across Azure, StackIt, OVHcloud, and Hetzner, I ended up with 59 successful runs: 17 for Azure, 13 for Hetzner, 14 for OVHcloud, and 15 for StackIt. Each run captures how a provider performed on the key metrics, and the charts compare the results for each metric and provider.

Several things stand out here. First, Hetzner delivers very quick cluster provisioning, but with a big asterisk: this is a minimal, self-managed k3s setup, not a full-fledged managed Kubernetes service. That speed comes with extra manual work and less automation.

In terms of stability, Azure and StackIt were solid: the pipeline always worked as expected. OVHcloud, on the other hand, occasionally failed to expose the application when a public IP couldn't be provisioned. Hetzner presented a different challenge: for casual users, resource limits are low, and deleting resources does not immediately free up that quota. This, combined with my slightly unorthodox use case, led to several pipeline failures. However, Hetzner's faster runtimes compensated, so I still managed to complete a similar number of runs as on the other providers.

When it comes to exposing applications, Azure is much quicker than its European managed counterparts, and it also leads the field in scaling up. Across these results, Azure frequently comes out on top, followed by StackIt, with OVHcloud often trailing. The most important takeaway, though, is that most results are consistent and predictable. As engineers, we can build around predictable performance, so this consistency is a real advantage.
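"Consistent and predictable" can be made concrete with the coefficient of variation (standard deviation divided by mean) of a metric across runs, computed per provider: the lower it is, the tighter the spread. A minimal sketch, assuming the results are available as `(provider, seconds)` pairs; `Spread` and `spread_by_provider` are hypothetical names, and no actual benchmark data is reproduced here.

```python
import statistics
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple, Tuple


class Spread(NamedTuple):
    runs: int
    mean_s: float
    stdev_s: float
    cv: float  # coefficient of variation: stdev / mean


def spread_by_provider(samples: Iterable[Tuple[str, float]]) -> Dict[str, Spread]:
    """Group (provider, seconds) samples for one metric and summarize their spread."""
    grouped: Dict[str, List[float]] = defaultdict(list)
    for provider, seconds in samples:
        grouped[provider].append(seconds)
    result: Dict[str, Spread] = {}
    for provider, values in grouped.items():
        mean = statistics.fmean(values)
        # The sample standard deviation needs at least two runs.
        stdev = statistics.stdev(values) if len(values) > 1 else 0.0
        result[provider] = Spread(len(values), mean, stdev, stdev / mean if mean else 0.0)
    return result
```

Because the coefficient of variation is unitless, it lets you compare the predictability of a fast provider and a slow one on equal terms.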

Takeaway

One of the biggest challenges in cloud adoption is vendor lock-in. This is the risk that your infrastructure becomes so tied to a single provider’s ecosystem that moving or going multi-cloud turns into a major project. This is where open standards and tools like Kubernetes and Terraform shine. By using Kubernetes as your platform layer and Terraform for infrastructure as code, you gain some real portability. You can define your infrastructure and workloads in a way that is mostly provider-agnostic, making it much easier to switch clouds, run hybrid, or avoid being boxed in by a single vendor. Of course, “provider-agnostic” doesn’t mean frictionless; there are always edge cases and integration challenges to consider.

In practice, there are still many differences: for example, around managed services, networking, compliance, integrations, and identity and access management (IAM). But the core experience can be remarkably consistent. This consistency is a major win for teams that want flexibility and leverage in their cloud strategy.

Not all clouds are created equal, and in Hetzner’s case, we’re not even talking about the same kind of cloud service. Running a self-managed k3s cluster on Hetzner is a whole different ballgame compared to the polished, fully managed Kubernetes services from Azure, OVHcloud, and StackIt. Hetzner’s DIY approach is lightning fast and cost-effective, but comes with extra manual work, less stability, and more operational responsibility. Hetzner’s model suits cost-conscious, hands-on teams who enjoy tinkering and want to save money, while managed services are ideal for those prioritizing ease, support, and a hands-off experience.

Conclusion

This investigation is just a small technical slice of a much larger puzzle. While it’s fun (and useful!) to see how these European cloud providers stack up on raw Kubernetes performance and cost, that’s only part of the story. Enterprise readiness is about so much more: identity providers, compliance, integrations, edge compute, support, and all the other facets that make a cloud platform fit for your organization.

So how successful was I in escaping the Americans? In my quest to escape Microsoft’s yoke by moving to our new European messiahs, my work accounts are hosted by Google, my repository and pipelines live on Microsoft-owned GitHub, and while Visual Studio Code is open source, it’s also owned by Microsoft. The laptop I’m writing this on? Windows, of course. Decoupling from these ecosystems is going to be a long road, and it’s not just about technology but about making hard choices at every level: tools, platforms, habits, and even organizational culture. The path to true digital sovereignty will be gradual, expensive, and messy. If you’re interested in exploring more European alternatives, check out european-alternatives.eu.

So, take these results for what they are: a fun peek into the world of European clouds and a snapshot of how far they’ve come in a short amount of time. The landscape is changing fast, and it’s exciting to see real alternatives emerging. If nothing else, I hope this inspires you to dig deeper, experiment, and keep an open mind about where you run your next cluster.